A lot has been said about Go being both a great general-purpose and low-level systems language, but one of its key strengths is its built-in concurrency model and tooling. Many other languages rely on third-party libraries for concurrency, but having it baked into the language from the start is where Go really shines.

Beyond that, Go stands out from other languages here because it ships with a robust set of tools for testing and building concurrent, parallel, and distributed code.

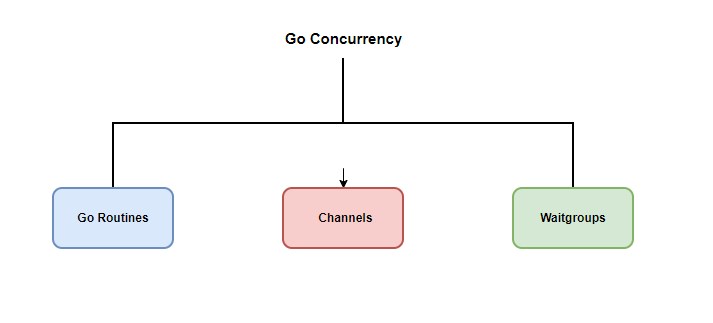

Go’s concurrency model has three important elements: goroutines, channels, and WaitGroups. We’ll look at each of these pillars step by step to understand how they make our code more efficient.

Goroutines

Goroutines are the primary way of handling concurrency in Go. You create one with the go keyword followed by a function call, either to a named function or to an anonymous function. The go statement returns immediately, and the function runs in the background as a goroutine while the program continues executing.

However, you cannot control the order in which your goroutines execute, because this depends on the Go scheduler, the operating system’s scheduler, and the current load on the machine.

Take for example this block of code:

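```go
package main

import (
	"fmt"
	"time"
)

// function prints 0 through 9 with no separators.
func function() {
	for i := 0; i < 10; i++ {
		fmt.Print(i)
	}
}

func main() {
	// Run function in its own goroutine; this call returns immediately.
	go function()

	// Run an anonymous function as a second goroutine.
	go func() {
		for i := 20; i < 40; i++ {
			fmt.Print(i, " ")
		}
	}()

	// Give both goroutines time to finish before main returns.
	time.Sleep(1 * time.Second)
	fmt.Println()
}
```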

The code starts function() as a goroutine. The program then continues executing while function() runs in the background. The second goroutine runs an anonymous function. The time.Sleep() call keeps main() alive for a second, because when main() returns, the program exits without waiting for its goroutines. You can also create multiple goroutines in a for loop, as we’ll see later on.

Executing the code three times gave the following output:

```
$ go run routine.go
20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 0123456789
$ go run routine.go
20 21 22 23 24 25 012345678926 27 28 29 30 31 32 33 34 35 36 37 38 39
$ go run routine.go
20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 0123456737 38 39 89
```

As you can see, the output differs from run to run. You cannot control the order in which goroutines execute unless you write code specifically to coordinate them, for example with signal channels.
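Channels are covered in detail later in this post, but here is a minimal sketch of the signal-channel idea. The helper runInOrder is hypothetical: each goroutine waits for a signal from the goroutine before it, does its work, and then closes its own signal channel to release the next one. The scheduler still decides when each goroutine runs, but the signals force the work to happen in a fixed order.

```go
package main

import (
	"fmt"
	"strings"
)

// runInOrder (hypothetical helper) starts one goroutine per label. Goroutine i
// waits until goroutine i-1 has closed its signal channel, appends its label,
// and then closes its own channel, so labels are always appended in order.
func runInOrder(labels []string) []string {
	var out []string

	// signals[i] is closed when goroutine i has finished its work.
	signals := make([]chan struct{}, len(labels))
	for i := range signals {
		signals[i] = make(chan struct{})
	}

	for i, label := range labels {
		go func(i int, label string) {
			if i > 0 {
				<-signals[i-1] // wait for the previous goroutine
			}
			out = append(out, label)
			close(signals[i]) // release the next goroutine
		}(i, label)
	}

	if len(labels) > 0 {
		<-signals[len(labels)-1] // wait for the last goroutine
	}
	return out
}

func main() {
	fmt.Println(strings.Join(runInOrder([]string{"first", "second", "third"}), " "))
	// prints "first second third" on every run
}
```

Because each close happens before the matching receive returns, the appends never overlap, so no mutex is needed.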

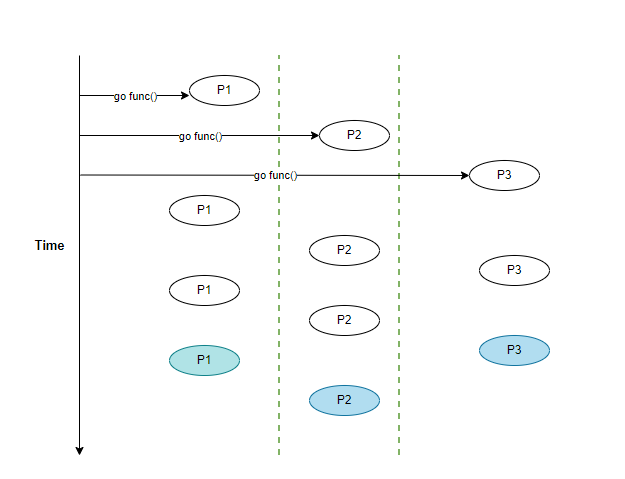

To get a better overview of what’s happening here, let’s look at this diagram:

The y-axis represents the time the program spends switching between processes.

In this case, we have three separate processes (P1, P2, and P3). Say you’re working with an API and send a request with P1. While P1 waits for a response, the program uses the idle computing resources to work on P2 and then P3. When P3 is complete (shown by the blue fill in its shape), the resources alternate between P1 and P2. Once P1 is complete, P2 gets all the computing resources allocated to the program until it, too, finishes.

Multiple Goroutines

Let’s consider the following code:

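```go
package main

import (
	"flag"
	"fmt"
	"time"
)

func main() {
	// The -n flag sets how many goroutines to create (default 10).
	n := flag.Int("n", 10, "Number of goroutines")
	flag.Parse()
	count := *n
	fmt.Printf("Creating %d goroutines\n", count)

	for i := 0; i < count; i++ {
		// Pass i as an argument so each goroutine prints its own copy.
		go func(x int) {
			fmt.Printf("%d ", x)
		}(i)
	}

	// Crude: hope one second is long enough for every goroutine to print.
	time.Sleep(time.Second)
	fmt.Println("\nExiting...")
}
```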

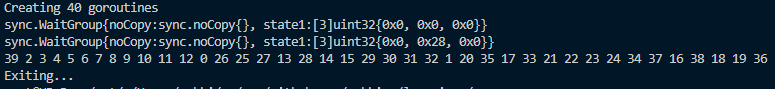

Running the code twice gave the following output:

```
$ go run multiple.go
Creating 10 goroutines
9 4 5 6 3 8 7 1 0 2
Exiting...
$ go run multiple.go
Creating 10 goroutines
9 0 1 2 3 4 5 6 7 8
Exiting...
```

Once again, the output is unpredictable: the numbers appear in a different order on each run, so you would have to search the output to find a particular value. The code also depends on a suitably long delay in the time.Sleep() call: if main() returns before a goroutine has printed, that goroutine’s output is simply lost. We’ll use time.Second for now, but as our code grows, guessing a delay like this becomes unreliable. Let’s look at how to avoid it.

Letting your goroutines finish

This part is about preventing main() from returning before the goroutines it started have finished.

We’ll continue working with the preceding code but add the sync package:

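```go
package main

import (
	"flag"
	"fmt"
	"sync"
)

func main() {
	n := flag.Int("n", 10, "Number of goroutines")
	flag.Parse()
	count := *n
	fmt.Printf("Creating %d goroutines\n", count)

	var waitGroup sync.WaitGroup
	// Printing the WaitGroup exposes its internal state. go vet warns that this
	// copies a lock value, and the second print can race with Done(), so do
	// this only for illustration.
	fmt.Printf("%#v\n", waitGroup)

	for i := 0; i < count; i++ {
		waitGroup.Add(1) // one more goroutine to wait for
		go func(x int) {
			defer waitGroup.Done() // mark this goroutine as finished
			fmt.Printf("%d ", x)
		}(i)
	}

	fmt.Printf("%#v\n", waitGroup)
	waitGroup.Wait() // block until every Done() has been called
	fmt.Println("\nExiting...")
}
```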

Oh wait, where are my manners? 😄 I’ve casually introduced a new concept, sync.WaitGroup, without covering it first. Don’t worry, I’ll get to it later on in the post.

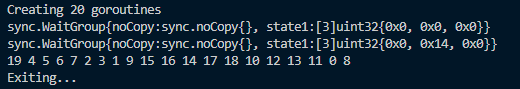

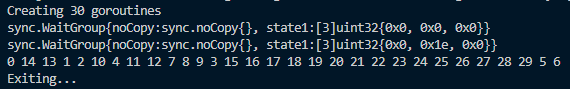

Executing the code will give you the following type of output:

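Again, the order of the numbers varies from execution to execution, especially with a large number of goroutines. The difference is that main() now waits until every goroutine has called Done() before it exits, instead of relying on an arbitrary sleep. The varying order is acceptable most of the time, but not always.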
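When you need the results in a predictable order, one common approach (a sketch, not the only option) is to let each goroutine write into its own slot of a pre-sized slice and read the slice only after Wait() returns. The helper squaresInOrder is hypothetical. The goroutines still run in an unpredictable order, but the results come out indexed by their position.

```go
package main

import (
	"fmt"
	"sync"
)

// squaresInOrder (hypothetical) starts one goroutine per index. Each goroutine
// writes only to results[x], so no two goroutines touch the same element and
// no mutex is needed. Reading results after Wait() gives a stable order.
func squaresInOrder(n int) []int {
	results := make([]int, n)
	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func(x int) {
			defer wg.Done()
			results[x] = x * x
		}(i)
	}
	wg.Wait()
	return results
}

func main() {
	fmt.Println(squaresInOrder(10)) // [0 1 4 9 16 25 36 49 64 81]
}
```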

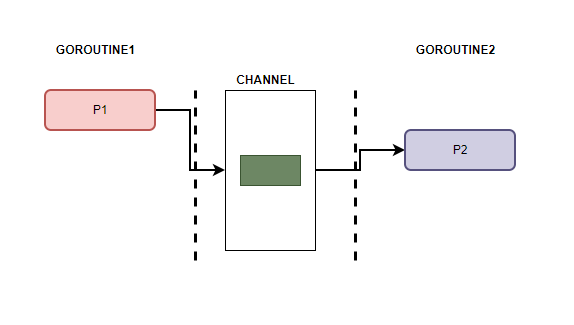

Channels

So far, we’ve worked with goroutines that can do quite a bit on their own, but they don’t communicate with each other. When several goroutines cooperate on a larger task, they need a way to pass results and signals between them and to know where each one is in its work. This is where channels come in.

A channel is a communication mechanism that lets goroutines exchange data, among other things. Channels come with two rules. First, a channel only carries values of one particular type, known as the element type of the channel. Second, a send needs a matching receive: on an unbuffered channel, the sender blocks until another goroutine receives the value (a buffered channel can hold a limited number of values before the sender blocks). It’s a bit like a basic communication process, where every message needs both a sender and a receiver.
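Here is a minimal sketch of both rules, using a hypothetical helper named handOff. The channel’s element type is string, and because the channel is unbuffered, the send inside the goroutine cannot complete until the receive in handOff takes the value.

```go
package main

import "fmt"

// handOff (hypothetical) sends msg on an unbuffered channel of strings from a
// separate goroutine and returns the value received on the other end.
func handOff(msg string) string {
	messages := make(chan string) // element type: string, no buffer

	go func() {
		messages <- msg // blocks until someone receives the value
	}()

	// Sending a value of another type, such as an int, on a chan string
	// would be a compile-time error.
	return <-messages
}

func main() {
	fmt.Println(handOff("ping")) // ping
}
```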

Working with channels

Writing the value of x to channel c is as easy as c <- x, and reading a value from c is as easy as x := <-c. The arrow shows the direction in which the data flows.

Let’s look at this in code:

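```go
package main

import (
	"fmt"
	"time"
)

// runLoopSend writes 0..n-1 to ch and then closes it.
func runLoopSend(n int, ch chan int) {
	for i := 0; i < n; i++ {
		ch <- i
	}
	close(ch)
}

// runLoopReceive reads from ch until it has been closed and drained.
func runLoopReceive(ch chan int) {
	for {
		i, ok := <-ch
		if !ok { // ok is false once ch is closed and empty
			break
		}
		fmt.Println("Received value: ", i)
	}
}

func main() {
	myChannel := make(chan int)
	go runLoopSend(10, myChannel)
	go runLoopReceive(myChannel)
	// Give both goroutines time to finish.
	time.Sleep(2 * time.Second)
}
```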

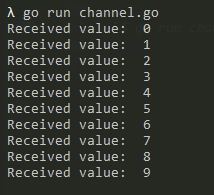

The chan keyword declares that ch is a channel whose element type is int. The statement ch <- i writes the value of i to ch, and close(ch) closes the channel: no more values can be sent on it (a send on a closed channel panics), but receivers can still drain it. In runLoopReceive, the two-value receive i, ok := <-ch sets ok to false once the channel is closed and empty, which ends the loop. In main, we create myChannel with make and hand it to both goroutines, so the values written by runLoopSend are read by runLoopReceive. Finally, main sleeps for 2 seconds to give both goroutines enough time to finish.

Running the code prints Received value: followed by each number from 0 to 9, one per line.
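The time.Sleep(2 * time.Second) call is a guess, just like in the goroutine examples. As a sketch of an alternative, assuming the hypothetical names send, receive, and collect, the version below waits on a done channel instead, uses for ... range to stop automatically once the channel is closed, and uses directional channel types (chan<- int for send-only, <-chan int for receive-only) so the compiler enforces which side each function plays.

```go
package main

import "fmt"

// send only writes to ch: receiving from a chan<- int is a compile-time error.
func send(n int, ch chan<- int) {
	for i := 0; i < n; i++ {
		ch <- i
	}
	close(ch) // tells the receiver no more values are coming
}

// receive only reads from ch. The range loop ends once ch is closed and
// drained, replacing the manual "i, ok := <-ch" check. When it is finished,
// it hands everything it received back on done.
func receive(ch <-chan int, done chan<- []int) {
	var got []int
	for v := range ch {
		fmt.Println("Received value:", v)
		got = append(got, v)
	}
	done <- got
}

// collect wires the two goroutines together and waits exactly as long as
// needed, with no time.Sleep.
func collect(n int) []int {
	ch := make(chan int)
	done := make(chan []int)
	go send(n, ch)
	go receive(ch, done)
	return <-done
}

func main() {
	got := collect(10)
	fmt.Println("received", len(got), "values")
}
```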

Reading from closed channels

Consider the following code:

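```go
package main

import "fmt"

func main() {
	// A buffered channel that can hold up to 10 ints.
	willClose := make(chan int, 10)

	willClose <- -1
	willClose <- 0
	willClose <- 1

	// Read the three values back, discarding them.
	<-willClose
	<-willClose
	<-willClose

	close(willClose)

	// The channel is closed and empty, so this returns int's zero value.
	read := <-willClose
	fmt.Println(read)
}
```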

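We create a buffered int channel called willClose with room for 10 values, write three values to it, and then read all three back without using them. We then close willClose and read from it once more. Because the channel is closed and empty, the receive does not block: it returns immediately with the zero value of the element type, which is 0 for int. Reading from an empty channel that is still open would block instead, and here it would deadlock, because no other goroutine could ever send to it.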
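Since a closed, drained channel returns the zero value, a plain receive cannot tell a real 0 that was sent apart from "the channel is closed". The two-value form v, ok := <-ch can. Here is a minimal sketch, with a hypothetical helper receiveAll, showing that values buffered before close() are still delivered with ok set to true, and only after the buffer is empty do receives return 0 with ok set to false.

```go
package main

import "fmt"

// receiveAll (hypothetical) performs n receives on ch and records each value
// together with its ok flag.
func receiveAll(ch <-chan int, n int) ([]int, []bool) {
	values := make([]int, n)
	oks := make([]bool, n)
	for i := 0; i < n; i++ {
		values[i], oks[i] = <-ch
	}
	return values, oks
}

func main() {
	ch := make(chan int, 2) // buffered: capacity 2
	ch <- 7
	ch <- 0 // a real zero value
	close(ch)

	values, oks := receiveAll(ch, 3)
	for i := range values {
		fmt.Println(values[i], oks[i])
	}
	// 7 true
	// 0 true
	// 0 false
}
```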

Running the code will give you this output:

```
$ go run read_channel.go
0
```

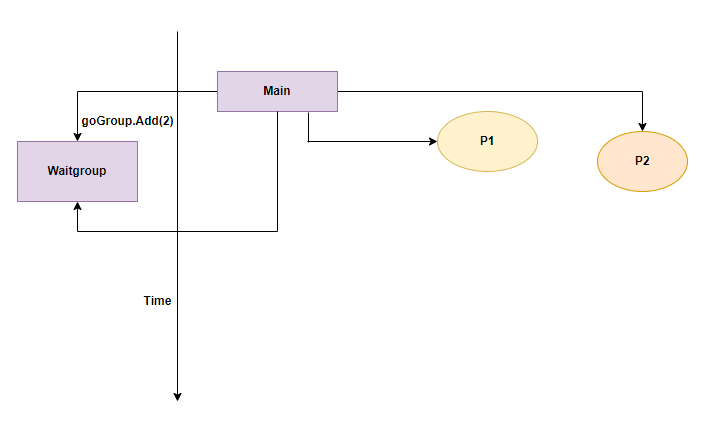

Waitgroups

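A WaitGroup, from the sync package, lets a program wait until a group of goroutines has finished before moving on. Add() increases an internal counter by the number of goroutines to wait for, each goroutine calls Done() when it finishes, and Wait() blocks until the counter drops back to zero.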

Let’s look at it in code to try and better understand it:

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

type Job struct {
	i    int
	max  int
	text string
}

// textOutput prints j.text until j.i reaches j.max, then marks itself done.
func textOutput(j *Job, goGroup *sync.WaitGroup) {
	defer goGroup.Done()
	for j.i < j.max {
		time.Sleep(1 * time.Millisecond)
		fmt.Println(j.text)
		j.i++
	}
}

func main() {
	goGroup := new(sync.WaitGroup)
	fmt.Println("Starting...")

	hello := &Job{i: 0, max: 2, text: "hello"}
	world := &Job{i: 0, max: 2, text: "world"}

	// Call Add before starting the goroutines, so Done() can never run first.
	goGroup.Add(2)
	go textOutput(hello, goGroup)
	go textOutput(world, goGroup)

	goGroup.Wait()
}
```

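Let’s begin with the main function. Here, new(sync.WaitGroup) creates a WaitGroup and gives us a pointer to it, goGroup, which we pass to both goroutines so that they share the same counter. goGroup.Add(2) sets that counter to 2 because we start two goroutines, each call to goGroup.Done() in textOutput decrements it, and goGroup.Wait() blocks main until it reaches zero. Call Add() before the go statements, so that a goroutine can never call Done() before the counter has been incremented. If you set the value to 3, the counter never reaches zero and the program fails with a deadlock error. If you started three goroutines but still called Add(2), Wait() could return after only two of them finish, so the third one’s output may or may not appear, and if it calls Done() while the program is still running, the counter goes negative and the program panics.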

Rather than hard-coding the count, it’s safer to derive it from the goroutines you actually start: call Add(1) right before each go statement, as we did in the loop earlier, and then call Wait() once.
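Here is a sketch of that pattern applied to the Job example. The helper runJobs and the extra "again" job are hypothetical, added for illustration. Because Add(1) sits right next to each go statement, the counter always matches the number of goroutines, however many jobs the slice holds. Each goroutine also records how many lines it printed in its own slot of counts, so no locking is needed.

```go
package main

import (
	"fmt"
	"sync"
)

// Job mirrors the struct from the example above.
type Job struct {
	i    int
	max  int
	text string
}

// runJobs (hypothetical) launches one goroutine per job and waits for all of
// them. counts[idx] holds how many lines job idx printed.
func runJobs(jobs []*Job) []int {
	var wg sync.WaitGroup
	counts := make([]int, len(jobs))
	for idx, j := range jobs {
		wg.Add(1) // one Add per goroutine, right before starting it
		go func(idx int, j *Job) {
			defer wg.Done() // always decrement the counter when this goroutine ends
			for ; j.i < j.max; j.i++ {
				fmt.Println(j.text)
				counts[idx]++
			}
		}(idx, j)
	}
	wg.Wait()
	return counts
}

func main() {
	jobs := []*Job{
		{max: 2, text: "hello"},
		{max: 2, text: "world"},
		{max: 1, text: "again"},
	}
	// The job lines appear in an unpredictable order, followed by [2 2 1].
	fmt.Println(runJobs(jobs))
}
```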

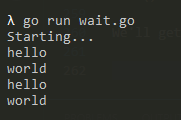

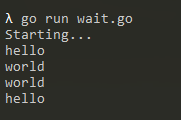

We’ll get the following output after running the code:

Concurrency can be powerful when applied well, especially in applications that handle heavy request loads, such as web crawlers.

Thank you for reading. Until next time!